Beyond the Black Box: Why AI Transparency in Business Processes Matters

From claims handling to employee and customer onboarding to compliance checks, the adoption of artificial intelligence in critical business workflows is expanding. We've talked before about the allure of agentic AI and the need for a foundation of profound process understanding. As organizations work to find success with AI, a common yet critical question often arises: Is there a clear line of sight into how AI was used and the decisions it made?

For many companies looking to automate complex workflows involving contracts, financial records, customer onboarding, loan and insurance applications, and claims processing, the answer is uncertain, and that poses significant risks.

As we’ve learned from recent regulatory developments and high-profile AI incidents, a black box approach to AI implementation will not be acceptable, especially for regulated industries where every decision must be auditable and defensible.

Without that auditability, organizations open themselves up to:

- Potential regulatory fines and sanctions

- Failed audits that hinder operations

- Customer complaints and reputational damage

- Inability to identify and fix bias or errors

- Legal liability when decisions can’t be defended

If organizations don’t know what model was used, what was asked of it, what data it touched or how it shaped an outcome, then control is an illusion. And without control, you can’t have trust.

The Black Box Problem

In most cases, AI sits like a black box inside a workflow. Within this black box, information can be embedded in code so it’s not visible or configurable to the end user, or variables can be scattered across different systems, buried in logs, stored in external platforms or, in the worst case, not captured at all.

This critical gap in visibility includes things like:

- What model was called?

- What prompt was used?

- Was the output based on real business data – or hallucinated?

- Did the AI suggestion influence the final decision?

The problem isn’t necessarily that the information doesn’t exist somewhere; it’s that it’s fragmented or stored in formats that aren’t easily audited.

If the answers to these questions aren’t clear and accessible, the process isn’t properly governed and will not meet compliance requirements. That’s a serious issue in industries like healthcare, insurance, finance or government – where compliance, auditability and fairness are non-negotiable.

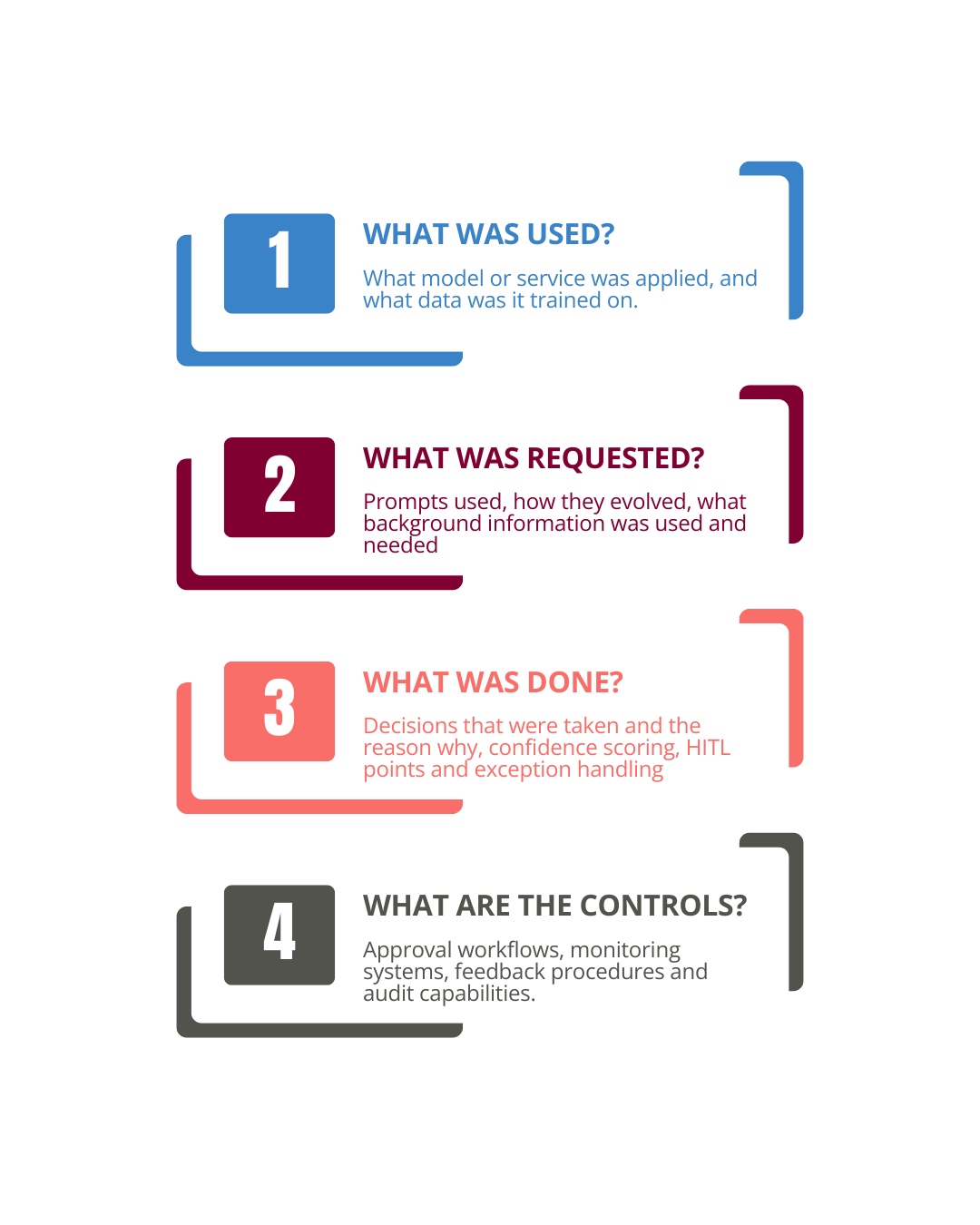

Four Pillars to AI Transparency

1. What was used? (Model Transparency)

Understanding your AI infrastructure requires maintaining a complete inventory of each AI component in your process ecosystem, and tracking how they evolve over time.

This includes:

- Specific model versions

- Training data lineage

- Third-party dependencies

- Configuration details

Why this matters:

Without this level of detail, you’re operating with invisible dependencies that can shift without warning.
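To make the inventory idea concrete, here is a minimal sketch of what one inventory entry might look like. The class name, field names and the `fingerprint` helper are illustrative assumptions, not part of any particular product or standard. The point is simply that each item in the list above becomes a recorded field, and a hash of the whole entry makes silent changes easy to spot.

```python
import hashlib
import json
from dataclasses import dataclass, field, asdict


@dataclass
class ModelInventoryEntry:
    """One AI component in a process (hypothetical schema for illustration)."""
    name: str
    version: str
    training_data_lineage: str
    third_party_dependencies: list = field(default_factory=list)
    configuration: dict = field(default_factory=dict)


def fingerprint(entry: ModelInventoryEntry) -> str:
    """Return a SHA-256 digest of the entry's contents.

    Keys are sorted so the same contents always produce the same digest.
    """
    payload = json.dumps(asdict(entry), sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def has_changed(previous: ModelInventoryEntry, current: ModelInventoryEntry) -> bool:
    """True if anything about the component differs between two snapshots."""
    return fingerprint(previous) != fingerprint(current)
```

Comparing the fingerprint stored at the last audit with the one computed today is one simple way to catch a dependency or configuration that shifted without warning.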

2. What was requested? (Query and Data Transparency)

The instructions given to AI systems determine their outputs. In document-heavy processes, maintaining records of what was asked (and how it evolved) is essential.

This includes:

- Prompting decisions

- Context and grounding data

- Query evolution over time

- Parameter configurations

Why this matters:

If two different insurance claims receive different outcomes because they were processed with different prompts, showing exactly what the AI was instructed to do (and why) is the difference between a defensible process and a compliance failure.
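As a rough sketch of the insurance example above, the snippet below records what was asked for each case and reports which parts of the request differed between two cases. `RequestRecord`, its fields and `diff_requests` are hypothetical names chosen for illustration; a real system would likely store more detail.

```python
from dataclasses import dataclass, field, asdict


@dataclass
class RequestRecord:
    """What was asked of the AI for one case (hypothetical schema)."""
    case_id: str
    prompt_version: str
    prompt_text: str
    grounding_sources: list = field(default_factory=list)
    parameters: dict = field(default_factory=dict)


def diff_requests(a: RequestRecord, b: RequestRecord, ignore=("case_id",)) -> dict:
    """Return {field: (value_in_a, value_in_b)} for every field that differs."""
    da, db = asdict(a), asdict(b)
    return {
        key: (da[key], db[key])
        for key in da
        if key not in ignore and da[key] != db[key]
    }


claim_1 = RequestRecord(
    case_id="claim-001",
    prompt_version="v1",
    prompt_text="Summarize the damage described in the claim.",
    grounding_sources=["policy.pdf"],
    parameters={"temperature": 0.0},
)
claim_2 = RequestRecord(
    case_id="claim-002",
    prompt_version="v2",
    prompt_text="Summarize the damage and flag missing documents.",
    grounding_sources=["policy.pdf"],
    parameters={"temperature": 0.0},
)
differences = diff_requests(claim_1, claim_2)
```

Here `differences` contains only `prompt_version` and `prompt_text`, which is the kind of evidence needed to explain why two claims were handled differently.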

3. What was done? (Decision Transparency)

Understanding how and why a decision was made is essential – every AI-driven action should create a clear, traceable record of the decision pathway.

This includes:

- Decision reasoning

- Confidence scoring & thresholds

- Human-in-the-loop thresholds

- Data points considered

- Exception and edge case handling

Why this matters:

If a customer challenges a decision, you need to demonstrate that it was based on legitimate factors that are consistently applied. When a regulator audits for bias, you need to prove which data points influenced decisions.
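One way to picture a traceable decision pathway is a record that stores the reasoning, the confidence score, the threshold in force and the data points considered, along with whether the case was handled automatically or routed to a person. The sketch below assumes a single confidence score between 0 and 1 and a hypothetical default threshold of 0.85; both are illustrative choices, not recommendations.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    """Traceable record of one AI-driven decision (hypothetical schema)."""
    case_id: str
    outcome: str
    reasoning: str
    confidence: float
    review_threshold: float
    data_points: list
    route: str          # "auto" or "human_review"
    recorded_at: str    # ISO 8601 timestamp in UTC


def record_decision(case_id, outcome, reasoning, confidence, data_points,
                    review_threshold=0.85):
    """Build a decision record and decide whether a human must review it."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    # Below the threshold, the AI suggestion is not applied automatically.
    route = "auto" if confidence >= review_threshold else "human_review"
    return DecisionRecord(
        case_id=case_id,
        outcome=outcome,
        reasoning=reasoning,
        confidence=confidence,
        review_threshold=review_threshold,
        data_points=list(data_points),
        route=route,
        recorded_at=datetime.now(timezone.utc).isoformat(),
    )
```

Storing the threshold alongside the score matters: if the threshold changes later, the record still shows which rule applied at the time of the decision.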

4. What are the controls? (Governance Transparency)

Perhaps most critically, organizations must maintain clear visibility into who can change AI configurations, how performance is monitored and what safeguards are in place.

This includes:

- Change management & approval workflows

- Real-time monitoring and alerts

- Version control and rollback capabilities

- Audit trail completeness

- Access controls and duty separation

- Model validation and testing procedures

Why this matters:

Demonstrating that you have proper control over AI systems is a fundamental requirement in regulated industries. Systematic monitoring, validation and control mechanisms mean that issues can be quickly identified, impacts contained and stakeholders reassured that AI is responsibly managed.
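Audit trail completeness can be illustrated with a small, tamper-evident log. The sketch below uses hash chaining, where each entry stores a hash of the previous one, so any later edit breaks the chain. This is one common technique offered as an assumption about how such a trail could be built; the `AuditTrail` class and its field names are hypothetical.

```python
import hashlib
import json


class AuditTrail:
    """Append-only log where each entry is chained to the previous one."""

    GENESIS = "0" * 64  # placeholder hash for the first entry

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(body: dict) -> str:
        payload = json.dumps(body, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def append(self, actor: str, action: str, details: dict) -> dict:
        """Record who did what, linked to the hash of the prior entry."""
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {"actor": actor, "action": action,
                "details": details, "prev_hash": prev_hash}
        entry = dict(body, hash=self._digest(body))
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Return True only if no entry was altered, removed or reordered."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash:
                return False
            if entry["hash"] != self._digest(body):
                return False
            prev_hash = entry["hash"]
        return True
```

A change to a model configuration would be appended with the approver as `actor`; running `verify()` during an audit then shows whether the recorded history is intact.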

From Black Box to Clear Trail

Regulated industries face an increasingly complex landscape of AI-related requirements. The EU’s AI Act, proposed U.S. federal guidelines and industry-specific regulations all point toward the same conclusion: AI systems must be transparent, accountable, and auditable. And it’s not just a compliance exercise; it’s a competitive advantage.

With robust AI visibility, organizations can:

- Respond faster to regulatory inquiries

- Improve AI performance

- Build stakeholder trust

- Reduce operational risk

The key is to build that visibility in from the start rather than trying to retrofit it afterwards.

The Way Forward: Transparency as a Strategic Asset

By building comprehensive AI visibility into systems and business processes, organizations don’t just meet regulatory requirements; they create more reliable, trustworthy automated workflows.

Companies that act now will find themselves better positioned to navigate the evolving landscape of AI governance while continuing to realize the efficiency gains that drew them to AI automation in the first place.

Stay tuned for our next post, where we’ll talk about the process-first path to ROI on your generative AI implementations.